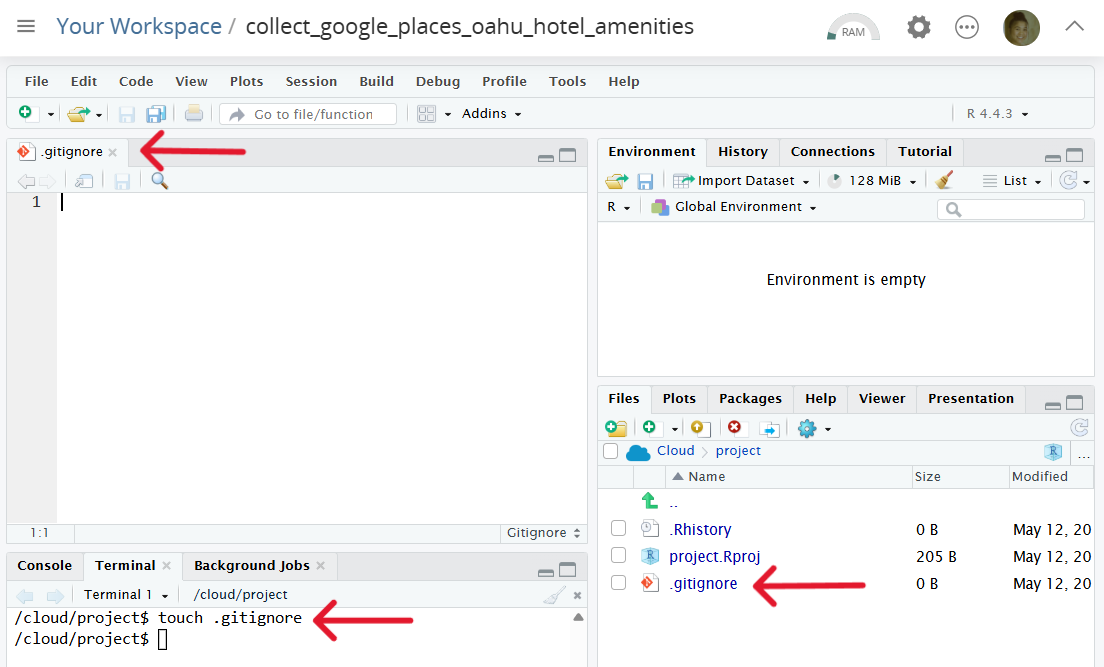

2. Open the .gitignore file, add the following files, and save:

.Renviron

.env

Alemarie Ceria

We want to collect the following data:

Use Git/GitHub for version control and collaboration

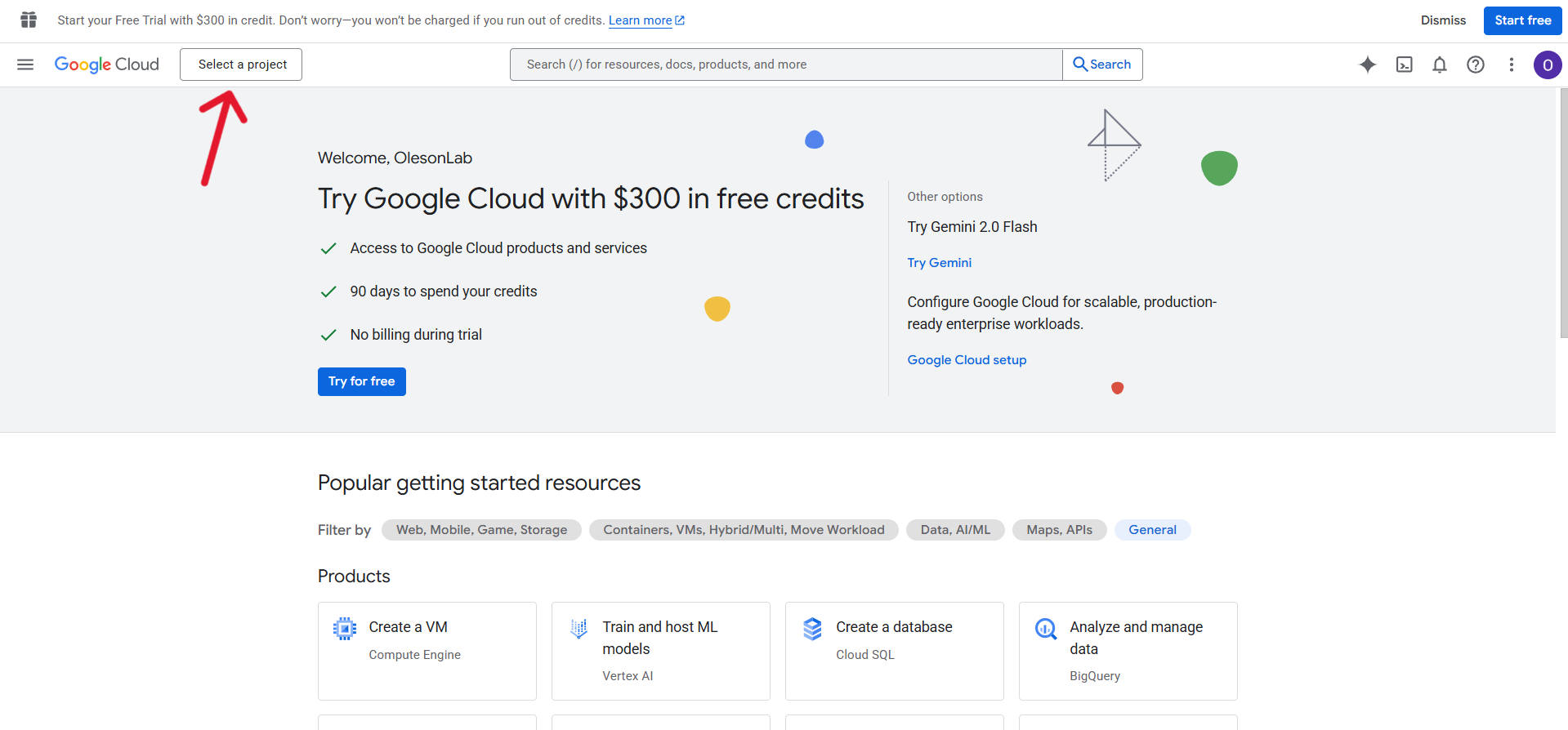

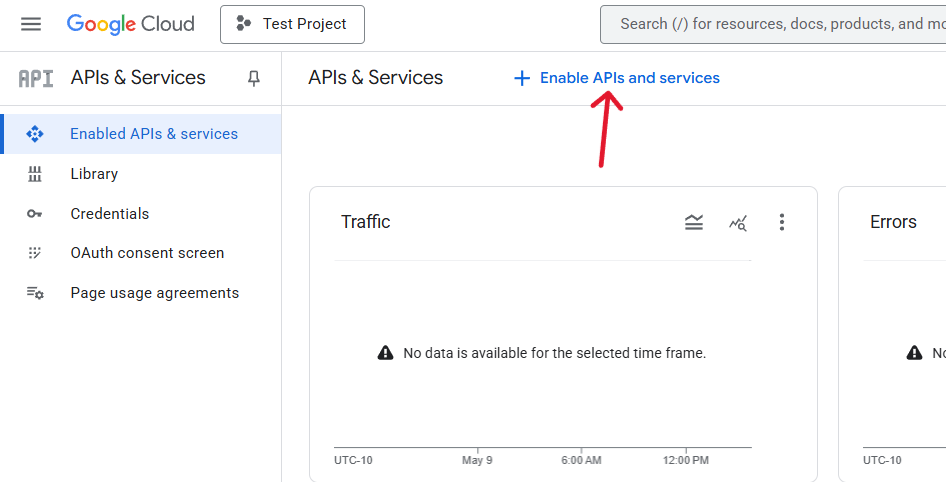

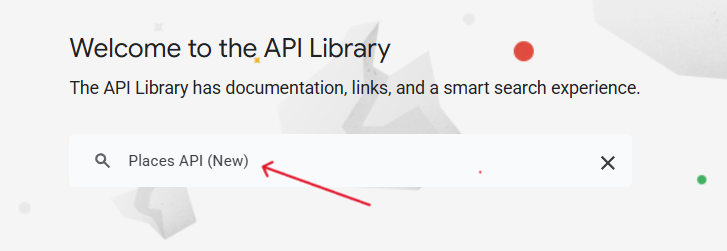

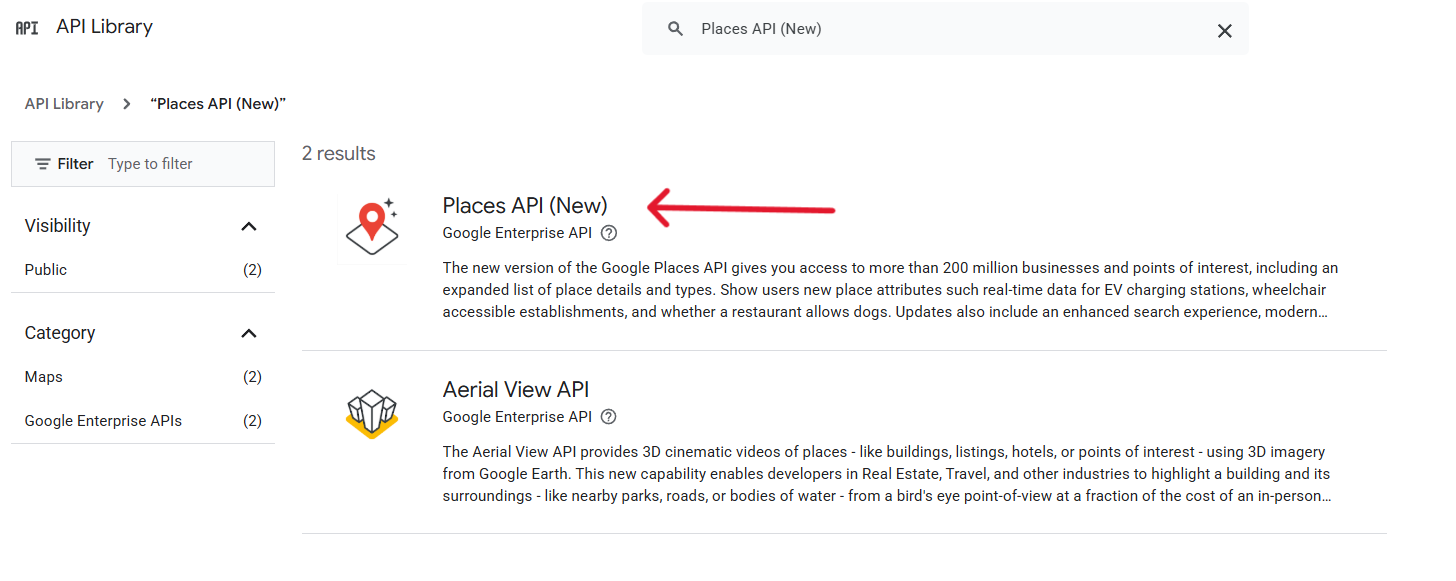

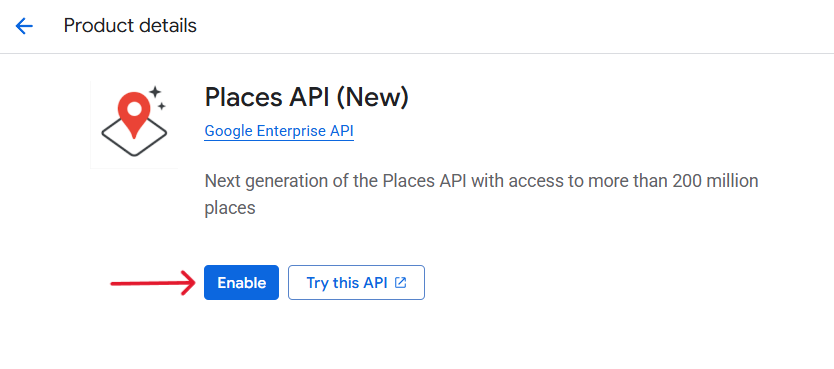

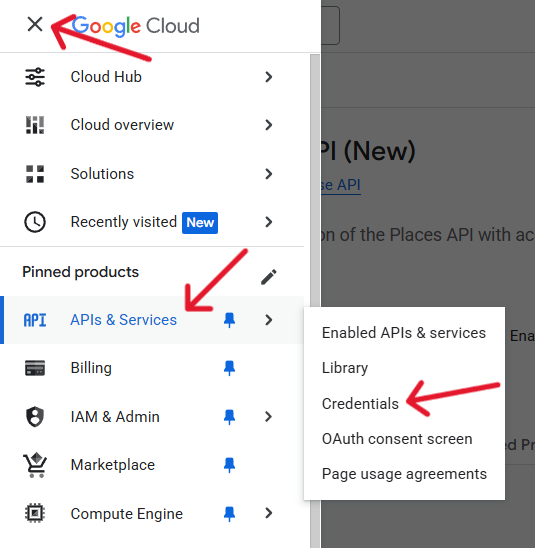

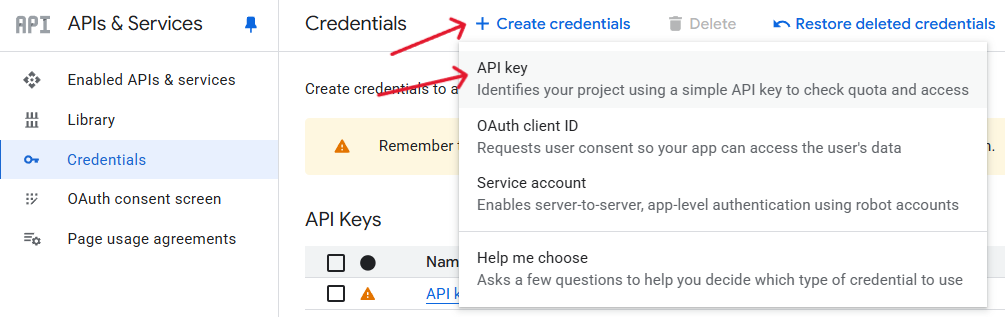

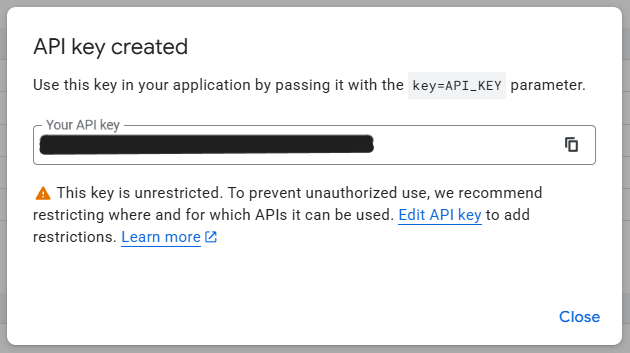

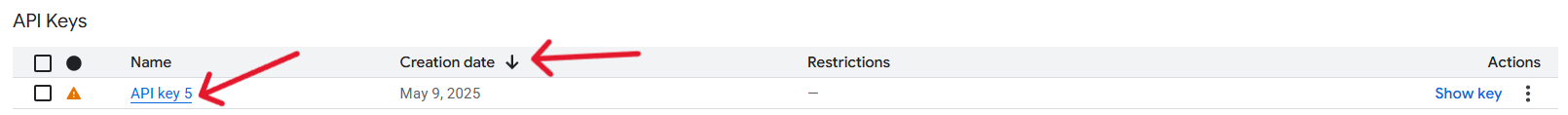

Navigate to Google Cloud Platform

Sign in or create an account

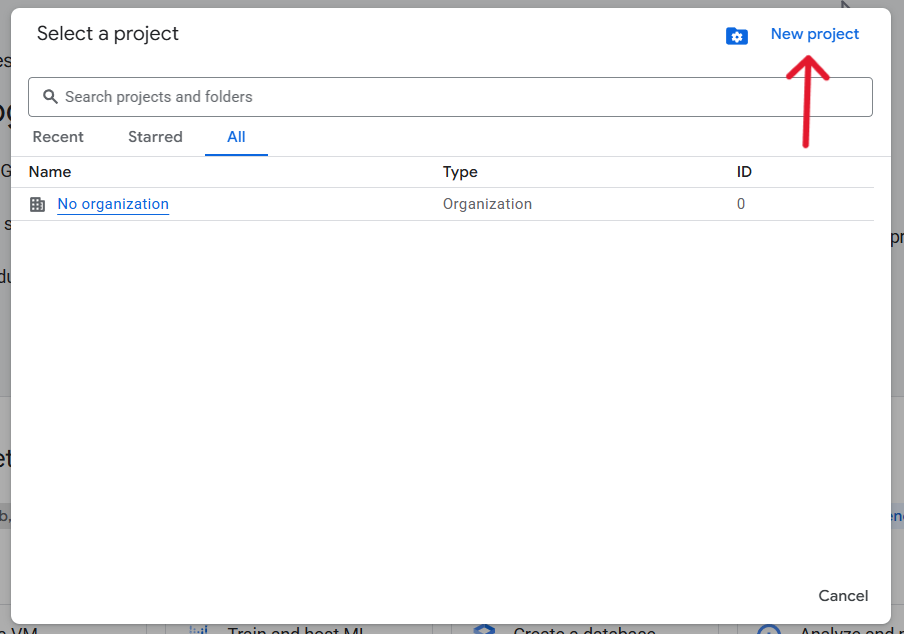

Click “Select a project” at the top left

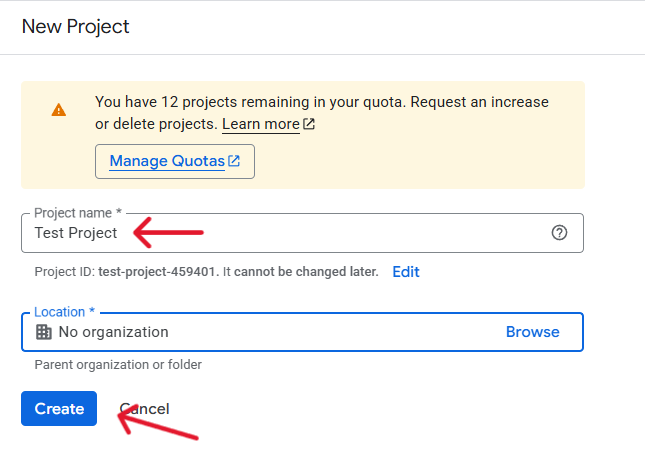

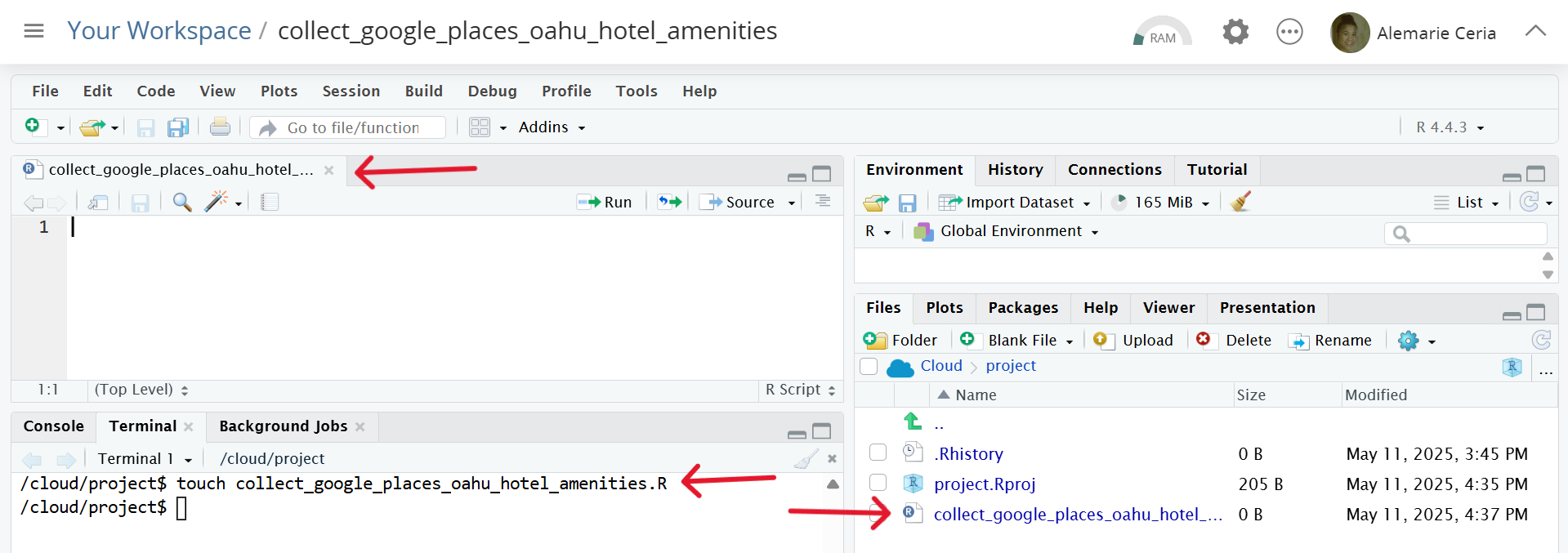

Name your project “collect_google_places_oahu_hotel_amenities”

File Naming Conventions:

Create a .gitignore file by copying and running the following code in your terminal
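The terminal command for this step is not preserved in this section. As a hedged alternative that can be run from the R console instead, the sketch below creates the file if it is missing and appends the two entries listed for `.gitignore`. `add_gitignore_entries()` is a hypothetical helper, not part of the original material, and the demonstration writes to a temporary file rather than the project's real `.gitignore`.

```r
# Hypothetical helper (not from the original): append entries to a
# .gitignore file, creating it if needed and skipping entries already present.
add_gitignore_entries <- function(entries, path = ".gitignore") {
  existing <- if (file.exists(path)) readLines(path, warn = FALSE) else character(0)
  new_entries <- setdiff(entries, existing)
  if (length(new_entries) > 0) {
    writeLines(c(existing, new_entries), path)
  }
  invisible(new_entries)
}

# Demonstrated on a temporary file so nothing in the project is touched.
# In a real project, call it with the default path from the project root.
demo_path <- tempfile("gitignore_")
add_gitignore_entries(c(".Renviron", ".env"), demo_path)
readLines(demo_path)
```

Keeping `.Renviron` and `.env` out of version control matters here because the API key is read from an environment variable rather than written into the scripts.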

```r
library(httr)
library(jsonlite)

# Fetch a single page of Text Search results.
# `request_params`: list containing the JSON body parameters
# `field_mask`: comma-separated string for the X-Goog-FieldMask header
# Returns: list with two elements: `places` (nested list) and `nextPageToken`
#   (string if there are more results on the next page, or NULL once all
#   results have been returned)
fetch_page <- function(request_params, field_mask) {
  response <- POST(
    url = ENDPOINT,
    add_headers(
      `Content-Type` = "application/json",
      `X-Goog-Api-Key` = Sys.getenv(API_KEY_ENV),
      `X-Goog-FieldMask` = field_mask
    ),
    body = toJSON(request_params, auto_unbox = TRUE)
  )

  # Validate HTTP status
  if (status_code(response) < 200 || status_code(response) >= 300) {
    err <- content(response, "parsed")$error
    # Fall back to a generic message when the body carries no error message
    err_message <- if (is.null(err$message)) "no error message returned" else err$message
    stop(sprintf("HTTP [%d]: %s", status_code(response), err_message))
  }

  data <- content(response, "parsed")
  if (!is.null(data$error)) {
    stop("API error: ", data$error$message)
  }

  list(
    places = data$places,
    nextPageToken = data$nextPageToken
  )
}
```

```r
# Run a Text Search and collect every page of results.
# `text_query`: string, e.g. "hotels and resorts in Oahu, Hawaii"
# `included_type`: optional string to filter by type ("hotel", "resort", etc.)
# `buffer`: optional list for locationBias (circle or rectangle)
# Returns: data frame of flattened results
get_places_text_search <- function(
  text_query,
  included_type = NULL,
  buffer = NULL
) {
  # a) Base parameters for every request
  common_params <- list(
    textQuery = text_query,
    strictTypeFiltering = TRUE,
    includePureServiceAreaBusinesses = FALSE
  )
  if (!is.null(included_type)) common_params$includedType <- included_type
  if (!is.null(buffer)) common_params$locationBias <- buffer

  # b) Fields to retrieve
  fields <- c(
    # Identification
    "places.name", "places.id", "places.displayName.text",
    # Location
    "places.location", "places.formattedAddress",
    "places.shortFormattedAddress",
    # Website
    "places.googleMapsUri", "places.websiteUri",
    # Category
    "places.types", "places.primaryTypeDisplayName.text",
    "places.dineIn",
    # Summaries
    "places.generativeSummary.overview.text",
    "places.editorialSummary.text",
    "places.reviewSummary.text.text",
    # Pagination
    "nextPageToken"
  )
  field_mask <- make_field_mask(fields)

  # c) Initialize storage for all pages
  all_results <- list()
  next_page_token <- NULL

  # d) Fetch each page in turn
  for (page_index in seq_len(MAX_PAGES)) {
    message(sprintf("Retrieving page %d...", page_index))

    # Prepare parameters for this page:
    # start with the base parameters and add pagination settings
    page_request <- common_params
    page_request$pageSize <- PAGE_SIZE
    if (!is.null(next_page_token)) {
      page_request$pageToken <- next_page_token
    }

    # Call the API for this page
    page_response <- fetch_page(page_request, field_mask)

    # Append the retrieved places to our full list
    all_results <- c(all_results, page_response$places)

    # Check if there is another page to fetch
    next_page_token <- page_response$nextPageToken
    if (is.null(next_page_token)) {
      message("All pages retrieved.")
      break
    }

    # Pause briefly before requesting the next page
    Sys.sleep(2)
  }

  # e) Convert the list of place entries into a flat data.frame
  # (auto_unbox keeps scalar fields as scalars rather than length-1 arrays)
  results_df <- fromJSON(
    toJSON(list(places = all_results), auto_unbox = TRUE),
    flatten = TRUE
  )$places

  message(sprintf("Fetched %d total places.", nrow(results_df)))
  results_df
}
```
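The functions above rely on several names that this section never defines: `ENDPOINT`, `API_KEY_ENV`, `PAGE_SIZE`, `MAX_PAGES`, and `make_field_mask()`. The sketch below shows one plausible set of definitions; they are assumptions, not the original author's code. The endpoint is the Places API (New) Text Search URL. The environment variable name `GOOGLE_PLACES_API_KEY` and the Oahu coordinates are hypothetical, and the page settings assume Text Search's limit of 20 results per page and 60 results overall.

```r
# Assumed definitions: the section references these names but does not show them.
ENDPOINT    <- "https://places.googleapis.com/v1/places:searchText"
API_KEY_ENV <- "GOOGLE_PLACES_API_KEY"  # hypothetical variable name set in .Renviron
PAGE_SIZE   <- 20                       # assumed: maximum results per page
MAX_PAGES   <- 3                        # assumed: 3 pages x 20 = 60-result cap

# Hypothetical helper: collapse field names into the comma-separated
# string expected by the X-Goog-FieldMask header, dropping duplicates.
make_field_mask <- function(fields) {
  paste(unique(fields), collapse = ",")
}

example_mask <- make_field_mask(
  c("places.id", "places.displayName.text", "nextPageToken")
)

# Approximate circle around Oahu for locationBias (coordinates are illustrative).
oahu_buffer <- list(
  circle = list(
    center = list(latitude = 21.44, longitude = -158.00),
    radius = 50000  # metres
  )
)

# Only call the API when the search function is loaded and a key is configured.
if (exists("get_places_text_search", mode = "function") &&
    nzchar(Sys.getenv(API_KEY_ENV))) {
  hotels <- get_places_text_search(
    text_query = "hotels and resorts in Oahu, Hawaii",
    included_type = "hotel",
    buffer = oahu_buffer
  )
}
```

Under these assumptions the key itself would sit in `.Renviron` on a line beginning `GOOGLE_PLACES_API_KEY=`, which is why that file belongs in `.gitignore`.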